Validating the Privacy of a Decentralised Medical Image-to-Image Translation Framework through Security Attacks

- chair: Medical Imaging for Modeling and Simulation

- type: Master's thesis

- tutor:

- person in charge:

Motivation

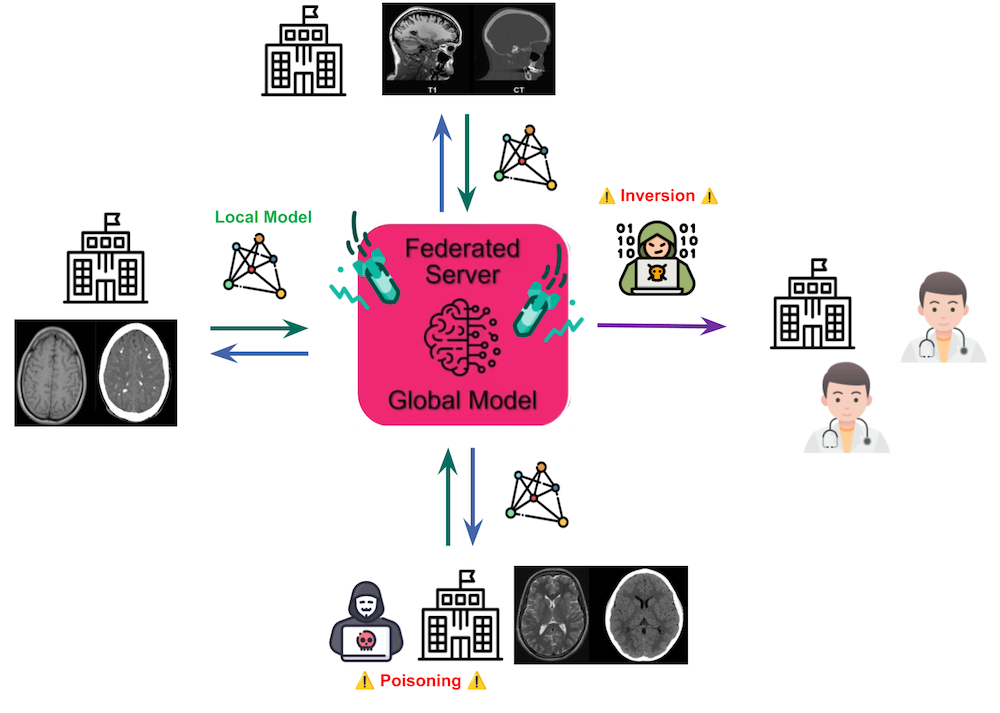

Federated Learning (FL) is an approach to training artificial intelligence models that is particularly useful in the medical field, where privacy is critical. Although FL offers clear privacy benefits by allowing models to be trained without centralising data, it also introduces new vulnerabilities. Several types of attacks, such as model poisoning, membership inference and reconstruction of training data from shared model updates, can compromise both the integrity of the model and the privacy of personal information. In recent months, an FL framework has been built that decentralises the training of a model translating Magnetic Resonance Imaging (MRI) images into synthetic Computed Tomography (CT) scans. A preliminary assessment of the methodology indicated that it is feasible to implement in a clinical context.

How can this federated approach be validated to ensure the protection of patient confidentiality, thereby enabling its deployment in real-world settings?